Sound localisation

Posted on 2007/01/10 - 20:30

I'm feeling obliged to write something here again, since it's been a few days since the last post. I've had a few interesting days, partly due to some tweaking of the Intercab project and some hardware juggling, because the neighbours' computer died a horrible death and it was my (paid, mind you) duty to bring it back to life. The most interesting thing, however, was an article in the current special edition of Scientific American with which I wholly disagreed.

The problem is that professor Masakazu Konishi of the California Institute of Technology proposes and dissects the systems barn owls use to determine the location of a sound. His theory is based on an old model originally sketched out in 1948 by Lloyd A. Jeffress (this document contains the general idea), which is still assumed to be correct. However, the ear already sends signals about the current phase of the sound wave to the brain (if I understand the articles I've read so far correctly), and on top of that this model also needs something called delay lines. These delay lines would slow down the action potentials of neurons, and even then the model doesn't wholly fit the observed biological behavior of the brain. So I've been in touch with M. Konishi in the hope of getting more details and a better understanding. My last mail contained (among other things) the following few paragraphs:

My trouble with grasping the functioning of delay lines is that I've never heard of them before, and can't find any articles explicitly explaining their biological mechanisms. I tend to believe right now that Jeffress' model is incorrect because it requires these lines which aren't proven to exist beyond doubt and are an extra requirement needed for the model to work. Even articles by your hand unfortunately don't confirm or deny the existence of these lines.

I'm referring to [1], where you seem to suggest that the phase angle changes along the length of an axon and that this axon is therefore behaving like a delay line: "Magnocellular afferents phase-lock, discharging at a particular phase angle of a sinusoidal stimulus (14). Shifts in the phase angle indicate changes in conduction time." But it's (as far as I know) impossible for a discharging axon to influence the angle of its action potential. Also keep in mind that the actual transfer of the action potential between neurons is done chemically, where no angle whatsoever is present. This is further reinforced by a line in the discussion section stating "...the probability of recording from more than one site along a single axon is negligible.", which leaves room for the suggestion that the potentials recorded at different phase angles don't originate from the same neuron.

For a non-expert such as myself it makes much more sense that different neurons fire at different phase angles, and that these are mapped against the signals from the other ear. When there's a difference in the angle at a particular time, it means that the sound didn't enter both ears simultaneously. The sign of the difference tells which ear is closer to the sound, and the number of degrees tells by exactly how much.
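To make the arithmetic behind this idea concrete, here is a minimal Python sketch. It is not taken from any of the cited articles. It assumes a pure tone of known frequency (`frequency_hz` is a made-up parameter), takes the offset as right phase minus left phase, and wraps it into the range (-180, 180] degrees. The only formula used is that a phase offset of Δφ degrees at frequency f corresponds to a time difference of Δφ / (360 · f).

```python
def wrap_degrees(angle):
    """Wrap an angle in degrees into the range (-180, 180]."""
    wrapped = (angle + 180.0) % 360.0 - 180.0
    # Map the lower edge onto +180 so the range is (-180, 180].
    return 180.0 if wrapped == -180.0 else wrapped


def interaural_offset(left_phase_deg, right_phase_deg, frequency_hz):
    """Return (offset in degrees, time difference in seconds, leading side).

    Assumption: a positive offset means the right ear is further along
    in the wave, so the sound reached the right ear first.
    """
    offset_deg = wrap_degrees(right_phase_deg - left_phase_deg)
    delay_s = offset_deg / 360.0 / frequency_hz
    if offset_deg > 0:
        side = "right"
    elif offset_deg < 0:
        side = "left"
    else:
        side = "centre"
    return offset_deg, delay_s, side


if __name__ == "__main__":
    # A 1 kHz tone where the right ear is a quarter cycle ahead.
    print(interaural_offset(0, 90, 1000))
```

Note that a single tone is inherently ambiguous: an offset of exactly 180 degrees could just as well be read as -180, and the sketch simply picks +180.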

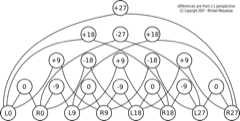

For clarity I drew a little map [2] matching 0, 90, 180 and 270 degrees for the two ears. The offset in degrees is directly correlated to the location of the sound, and I've filled in the approximate offset between the two ears required to let a particular neuron fire. At the bottom you can see the axons from the left and right ear. If L0 (0 degrees phase angle, left ear) fires at the same time as R180 (180 degrees phase angle, right ear), the neuron connected to these two will fire, giving an offset of +180 degrees. In other words: the object is at the being's right.
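The map described above can be sketched as a small lookup table. This is only an illustration under stated assumptions: the labels (`L0`, `R180`, ...) follow the naming in the text, the table holds one hypothetical neuron per pair of left and right axons, and the offsets are wrapped into (-180, 180] degrees, which may not match how the drawing labels them.

```python
PHASES = (0, 90, 180, 270)  # phase angles used in the map, in degrees


def offset_degrees(left_phase, right_phase):
    """Right minus left phase, wrapped into (-180, 180]."""
    diff = (right_phase - left_phase) % 360
    return diff - 360 if diff > 180 else diff


def build_map():
    """One hypothetical neuron per (left axon, right axon) pair.

    Each neuron is represented only by the offset it would signal
    when both of its input axons fire at the same time.
    """
    return {
        (f"L{left}", f"R{right}"): offset_degrees(left, right)
        for left in PHASES
        for right in PHASES
    }


def locate(neuron_map, left_axon, right_axon):
    """Look up which offset the coinciding pair of axons signals."""
    offset = neuron_map[(left_axon, right_axon)]
    if offset > 0:
        return offset, "right"
    if offset < 0:
        return offset, "left"
    return offset, "centre"


if __name__ == "__main__":
    neuron_map = build_map()
    # The example from the text: L0 firing together with R180.
    print(locate(neuron_map, "L0", "R180"))
```

With four phase angles per ear the table has sixteen entries, but only four distinct offsets (-90, 0, 90 and 180), so several pairs of axons signal the same location.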

I know, I'm kicking against an established theory here. But maybe I'm right and they're wrong. Maybe I'm going to be proved wrong, which is even better. For example, I'm still not absolutely sure that the neurons from the ear actually provide the brain with this phase information. In either case, it's hugely interesting and hopefully I can soon tie some of this stuff together with perennial and get some cool stuff. We'll soon see, err, I mean, hear!

[: wacco :]

[1]: http://www.pubmedcentral.nih.gov/picrender.fcgi?artid=282419&blobtype=pdf

[2]:

Visit the Project VGA Page
